Divisive Hierarchical Clustering Essentials

Hierarchical clustering [or hierarchical cluster analysis (HCA)] is an alternative to partitioning clustering for grouping objects based on their similarity. Unlike partitioning clustering, hierarchical clustering does not require the number of clusters to be specified in advance.

Hierarchical clustering can be subdivided into two types:

Agglomerative clustering, in which each observation initially forms a cluster of its own (a leaf). The most similar clusters are then successively merged until there is a single big cluster (the root).

Divisive clustering, the inverse of agglomerative clustering, begins with the root, in which all objects are included in one cluster. The most heterogeneous clusters are then successively divided until each observation is in its own cluster.
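To make the divisive procedure concrete, here is a minimal sketch in Python (not the R code used elsewhere in this chapter). It follows the "splinter group" heuristic popularized by DIANA (DIvisive ANAlysis, available in R as `cluster::diana`) and assumes a precomputed symmetric dissimilarity matrix `d`. At each step, the cluster with the largest diameter is treated as the most heterogeneous one and split in two. The function names `diameter`, `avg_dissim`, `split_cluster` and `diana` are hypothetical illustrations, not an existing library API.

```python
from itertools import combinations


def diameter(cluster, d):
    """Largest pairwise dissimilarity within a cluster (0 for a singleton)."""
    return max((d[i][j] for i, j in combinations(cluster, 2)), default=0)


def avg_dissim(i, group, d):
    """Average dissimilarity from object i to the members of group other than i."""
    others = [j for j in group if j != i]
    return sum(d[i][j] for j in others) / len(others) if others else 0.0


def split_cluster(cluster, d):
    """Split one cluster in two with the splinter-group heuristic."""
    remaining = list(cluster)
    # Seed the splinter group with the object that has the largest
    # average dissimilarity to the rest of the cluster.
    seed = max(remaining, key=lambda i: avg_dissim(i, remaining, d))
    splinter = [seed]
    remaining.remove(seed)
    # Repeatedly move the object that is, on average, most clearly closer
    # to the splinter group than to the objects left behind.
    while len(remaining) > 1:
        best, best_gain = None, 0.0
        for i in remaining:
            gain = avg_dissim(i, remaining, d) - avg_dissim(i, splinter, d)
            if gain > best_gain:
                best, best_gain = i, gain
        if best is None:  # no object prefers the splinter group: stop
            break
        splinter.append(best)
        remaining.remove(best)
    return splinter, remaining


def diana(d):
    """Top-down clustering: start from the root and split until all are singletons.

    Returns the splits as (height, part_a, part_b), where height is the
    diameter of the cluster that was divided.
    """
    clusters = [list(range(len(d)))]  # the root holds every object
    splits = []
    while any(len(c) > 1 for c in clusters):
        # Divide the most heterogeneous cluster (largest diameter).
        target = max((c for c in clusters if len(c) > 1), key=lambda c: diameter(c, d))
        clusters.remove(target)
        a, b = split_cluster(target, d)
        clusters += [a, b]
        splits.append((diameter(target, d), sorted(a), sorted(b)))
    return splits


# Five points on a line; dissimilarity is the absolute difference.
x = [0, 1, 2, 10, 11]
d = [[abs(p - q) for q in x] for p in x]
for height, part_a, part_b in diana(d):
    print(height, part_a, part_b)
```

With these five points, the first split separates {10, 11} (indices 3 and 4) from {0, 1, 2} at height 11. The remaining splits then break those groups down to single observations. Choosing the diameter as the measure of heterogeneity is one common option, not the only one.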

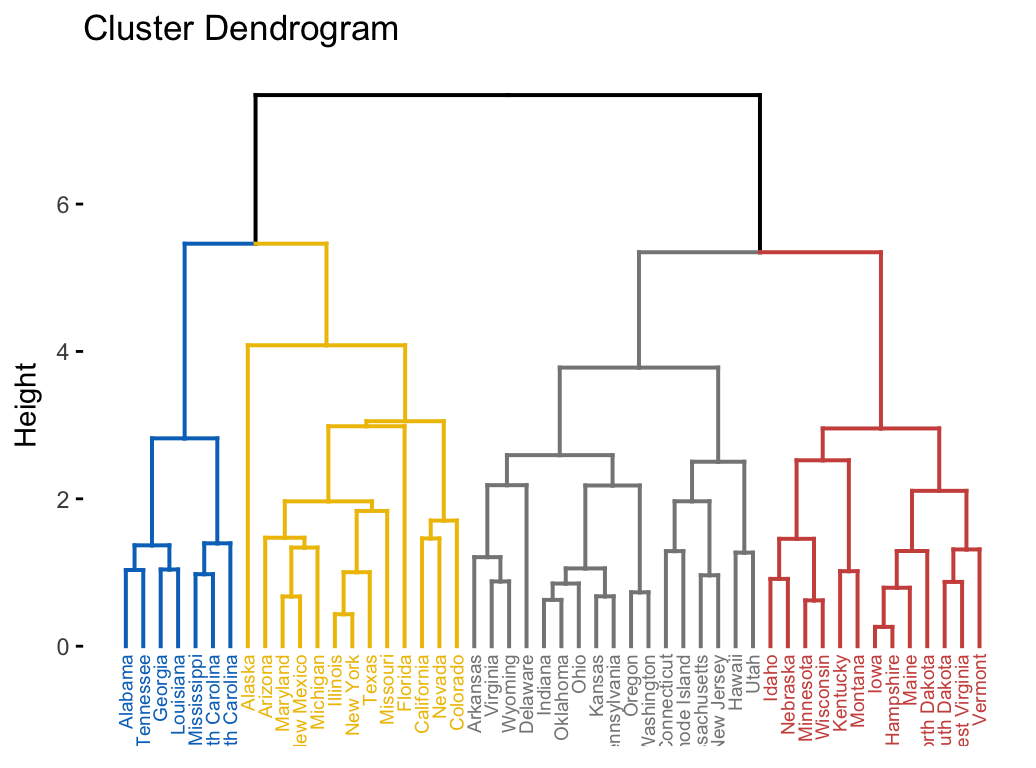

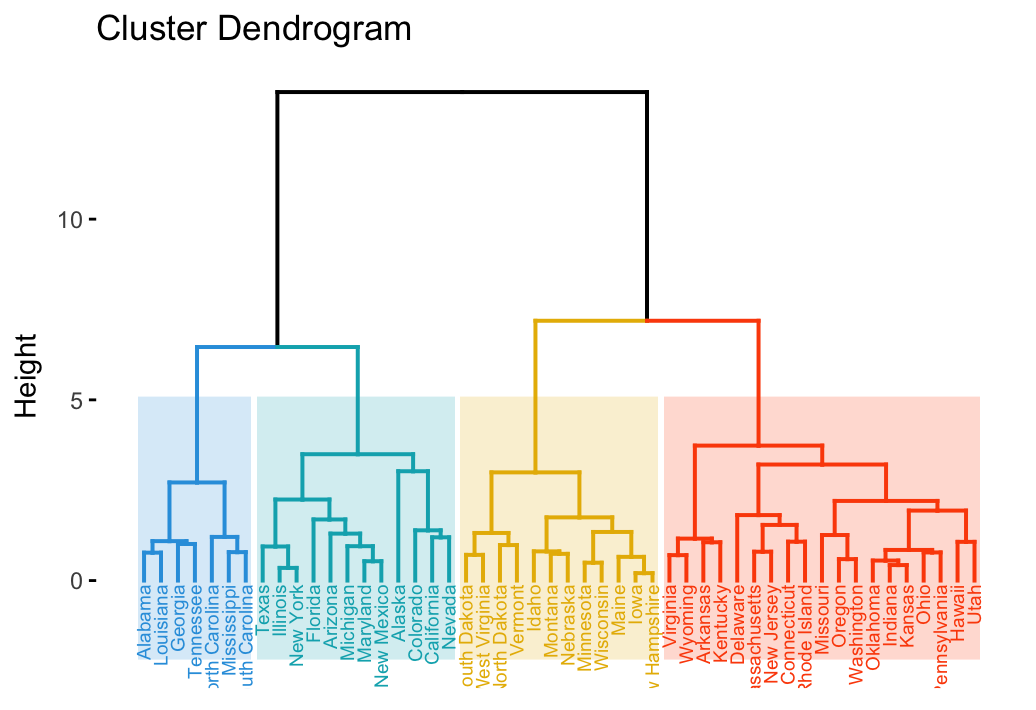

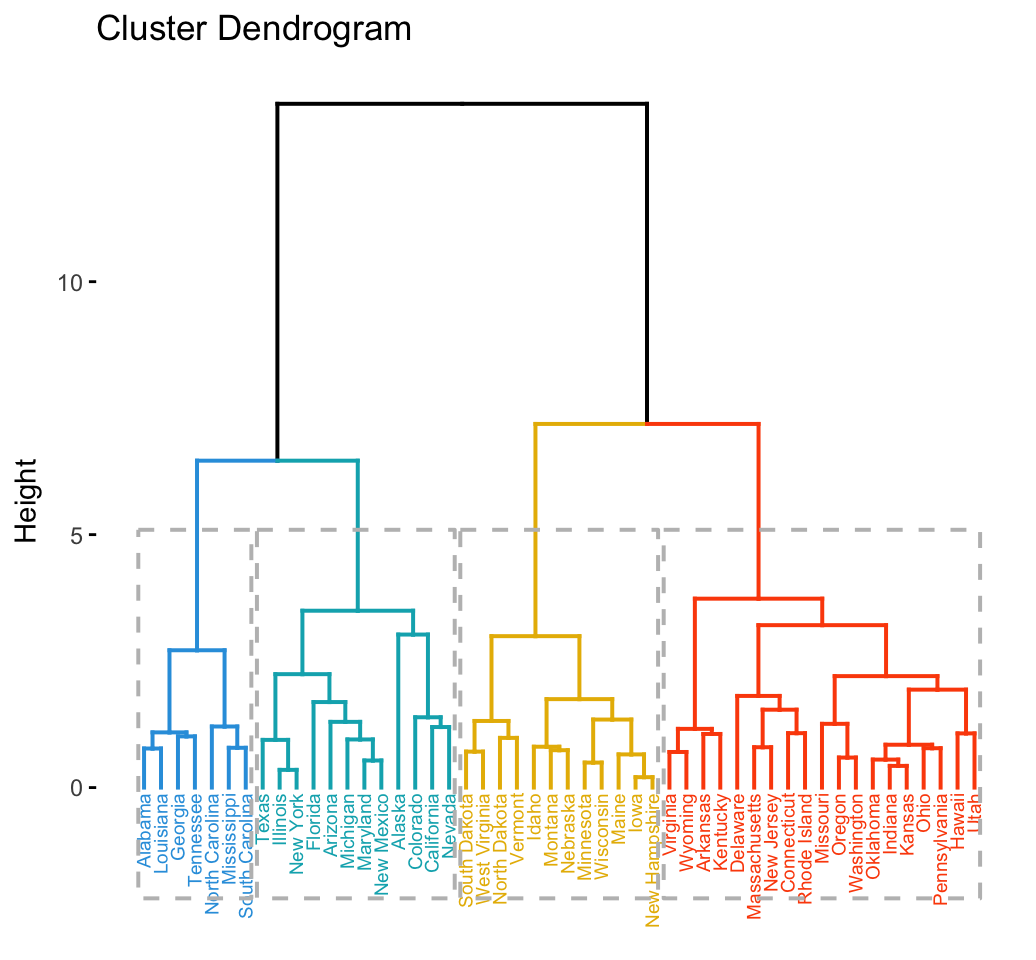

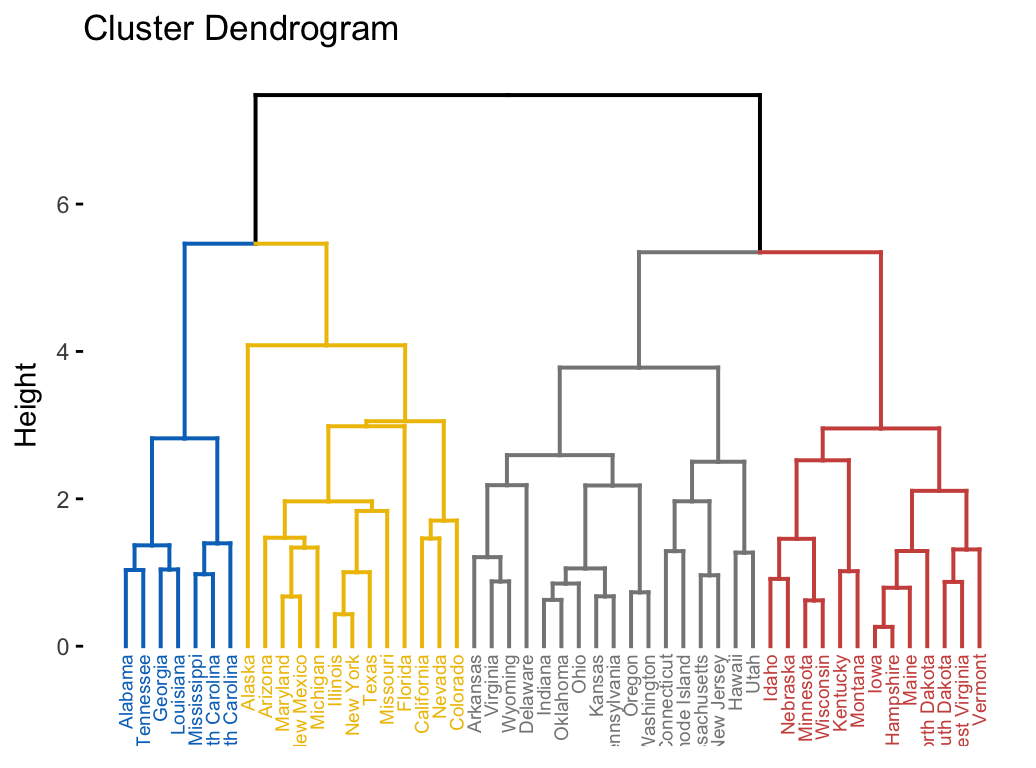

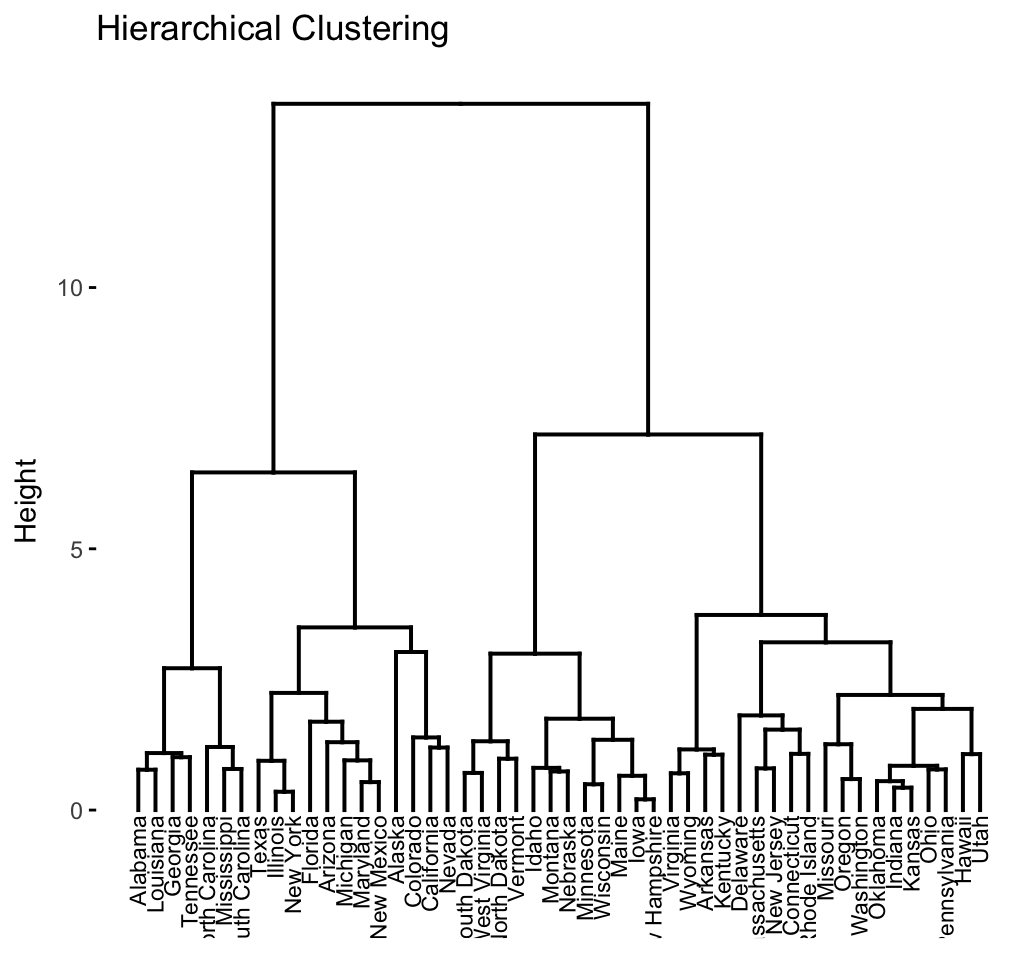

The result of hierarchical clustering is a tree-based representation of the objects, also known as a dendrogram (see the figure below).
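In code, a dendrogram can be stored as a merge history. Each entry records which two clusters were joined and at what height (dissimilarity). The sketch below builds such a history with naive agglomerative clustering. It assumes average linkage, where the distance between two clusters is the mean of all pairwise dissimilarities. The chapter does not fix a linkage method, and in R the analogous call would be `hclust()` with `method = "average"`. The function name `agglomerate` is hypothetical, and the O(n³) loop is for illustration only.

```python
from itertools import combinations


def agglomerate(d):
    """Naive bottom-up clustering with average linkage.

    d is a symmetric dissimilarity matrix given as a list of lists.
    Returns the merge history as (height, left_members, right_members),
    which is the information a dendrogram draws.
    """
    clusters = [[i] for i in range(len(d))]  # every observation starts as a leaf
    merges = []
    while len(clusters) > 1:
        # Find the pair of clusters with the smallest average dissimilarity.
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            ca, cb = clusters[a], clusters[b]
            dist = sum(d[i][j] for i in ca for j in cb) / (len(ca) * len(cb))
            if best is None or dist < best[0]:
                best = (dist, a, b)
        height, a, b = best
        merges.append((height, sorted(clusters[a]), sorted(clusters[b])))
        # Replace the pair by its union; a < b, so index a survives the del.
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return merges


x = [0, 1, 2, 10, 11]
d = [[abs(p - q) for q in x] for p in x]
for height, left, right in agglomerate(d):
    print(height, left, right)
```

For these points, the merges happen at heights 1.0, 1.0, 1.5 and 9.5. The large final jump is what a dendrogram makes visible: two well-separated groups.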

The dendrogram is a multilevel hierarchy in which clusters at one level are joined together to form the clusters at the next level. This makes it possible to decide the level at which to cut the tree to generate suitable groups of data objects.
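Cutting the tree at a height h amounts to applying only the merges that happen at or below h. Every branch crossing the horizontal line at h then becomes one group. The Python sketch below shows the idea, similar in spirit to R's `cutree()` with its `h` argument. The function `cut_tree` is hypothetical, and the merge history is a small hand-worked example for five points x = 0, 1, 2, 10, 11, not data from this chapter.

```python
def cut_tree(n, merges, h):
    """Assign a group label to each of n observations by cutting the tree at height h.

    merges lists (height, left_members, right_members) for every merge in the tree.
    Only merges at or below h are applied, so each branch that crosses
    the line at height h becomes one group.
    """
    label = list(range(n))  # before any merge, each observation is its own group
    for height, left, right in merges:
        if height > h:
            continue
        # Give the right-hand cluster the same label as the left-hand one.
        keep, drop = label[left[0]], label[right[0]]
        label = [keep if lab == drop else lab for lab in label]
    # Renumber groups 1, 2, 3, ... in order of first appearance.
    renumber = {}
    return [renumber.setdefault(lab, len(renumber) + 1) for lab in label]


# Hypothetical merge history for x = 0, 1, 2, 10, 11
# (average linkage on absolute differences, worked out by hand).
merges = [(1.0, [0], [1]), (1.0, [3], [4]), (1.5, [0, 1], [2]), (9.5, [0, 1, 2], [3, 4])]
print(cut_tree(5, merges, h=5))    # [1, 1, 1, 2, 2]
print(cut_tree(5, merges, h=1.2))  # [1, 1, 2, 3, 3]
```

A high cut yields a few coarse groups, and a low cut yields many small ones. Choosing h is the "suitable level" decision described above.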

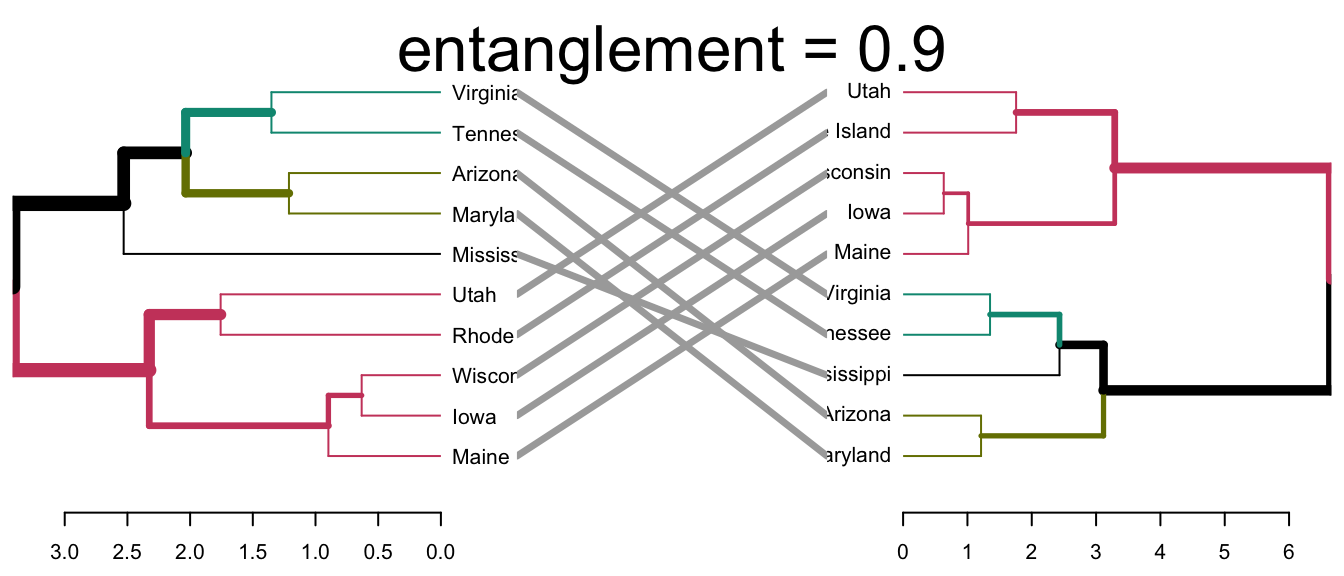

In previous chapters, we defined several methods for measuring distances between objects in a data matrix. In this chapter, we’ll show how to visualize the dissimilarity between objects using dendrograms.

We start by describing hierarchical clustering algorithms and providing R scripts for computing and visualizing the results of hierarchical clustering. Next, we’ll demonstrate how to cut dendrograms into groups. We’ll also show how to compare two dendrograms. Finally, we’ll provide solutions for handling dendrograms of large data sets.

Contents:

Divisive Hierarchical Clustering

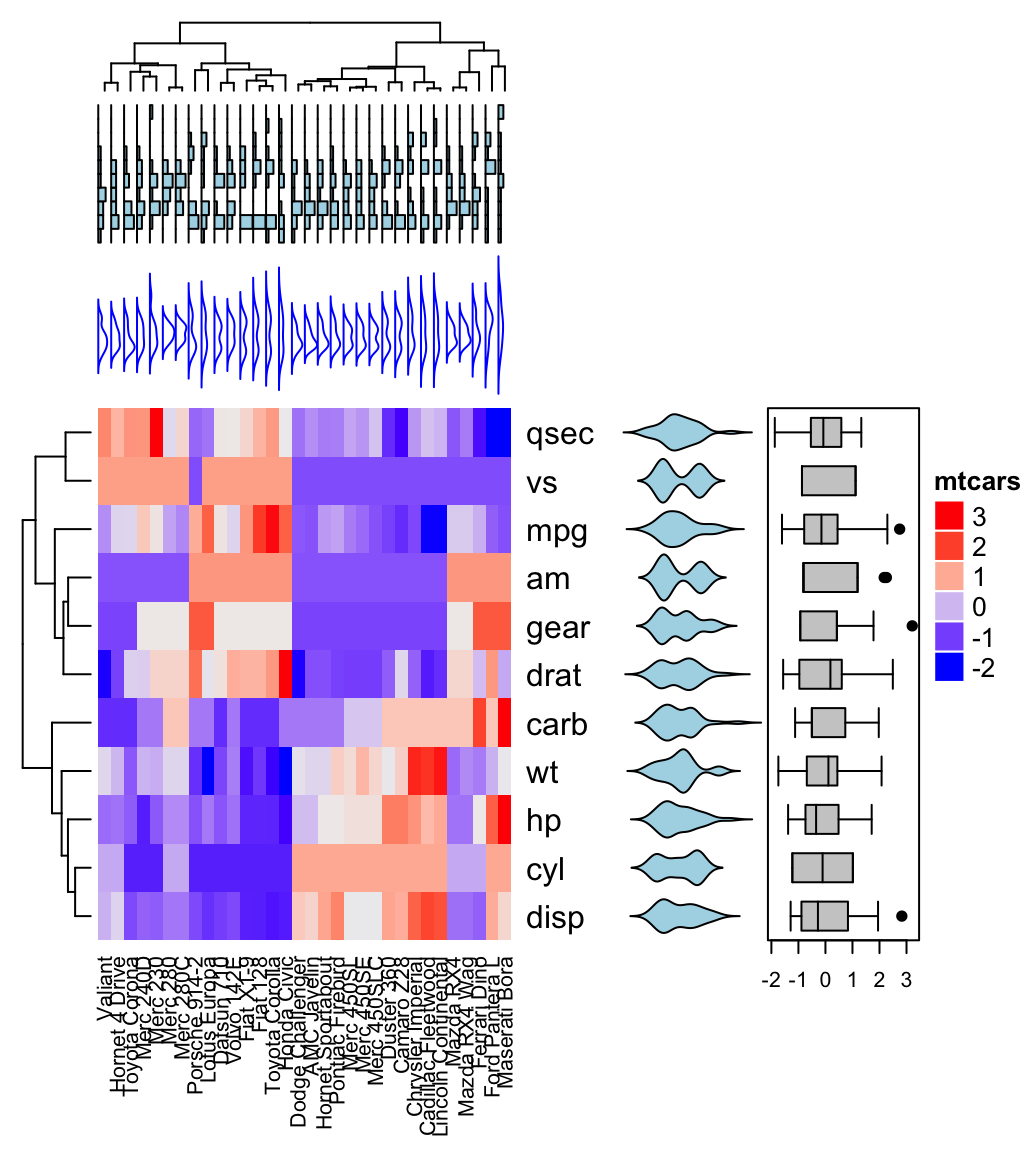

Heatmap: Static and Interactive
